Just believing that an AI is helping boosts your performance

People perform better if they think they have an AI assistant, even when they’ve been told it’s unreliable and won’t help them.

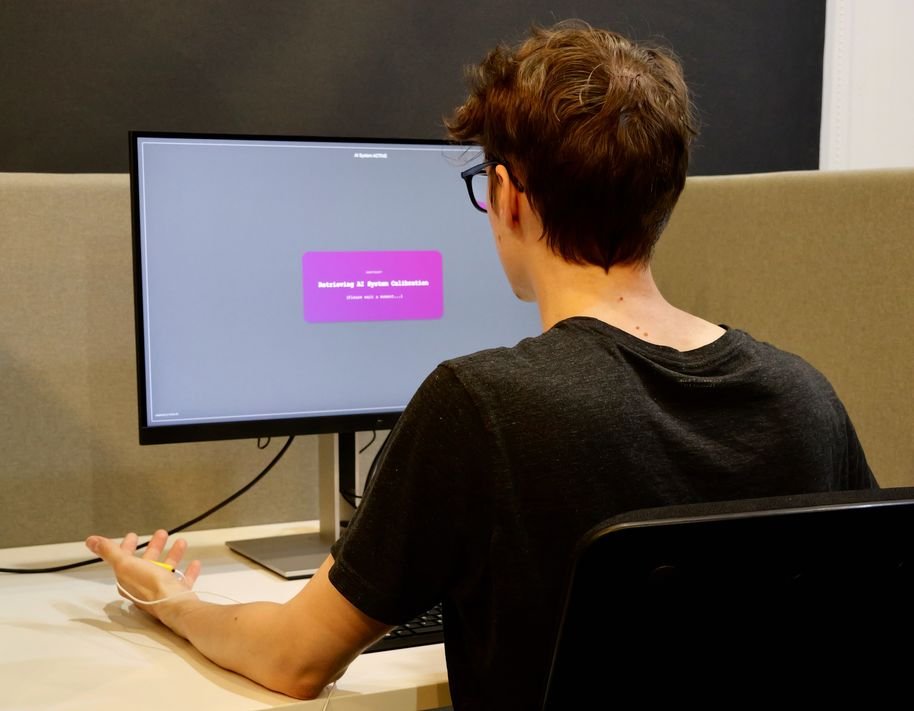

Participants were asked to perform a task once on their own and once supposedly aided by an AI system. Image: Otso Haavisto / Aalto University

According to new research from Aalto University, an AI that seems to be helping may in fact be producing a placebo effect: people perform better simply because they expect to. The study also shows how difficult it is to shake people’s trust in the capabilities of AI systems.

In this study, participants were given a simple letter recognition exercise. They performed the task once on their own and once supposedly aided by an AI system. Half of the participants were told the system was reliable and would enhance their performance, and the other half were told that it was unreliable and would worsen their performance.

‘In fact, neither AI system ever existed. Participants were led to believe an AI system was assisting them, when in reality, what the sham-AI was doing was completely random,’ explains doctoral researcher Agnes Kloft.

The participants had to pair letters that popped up on screen at varying speeds. Surprisingly, both groups performed the exercise more efficiently – more quickly and attentively – when they believed an AI was involved.

‘What we discovered is that people have extremely high expectations of these systems, and we can’t make them AI doomers simply by telling them a program doesn’t work,’ says Assistant Professor Robin Welsch.

Following the initial experiments, the researchers conducted an online replication study that produced similar results. They also added a qualitative component, inviting participants to describe their expectations of performing a task with an AI. Most had a positive outlook toward AI, and, surprisingly, even skeptical participants still had positive expectations about its performance.

The findings pose a problem for the methods generally used to evaluate emerging AI systems. ‘This is the big realization coming from our study: it’s hard to evaluate programs that promise to help you because of this placebo effect,’ Welsch says.

While powerful technologies like large language models undoubtedly streamline certain tasks, subtle differences between versions may be amplified or masked by the placebo effect, and marketing can effectively exploit this.

The results also pose a significant challenge for research on human-computer interaction: unless studies include a placebo control, users’ expectations can influence the outcome.

‘These results suggest that many studies in the field may have been skewed in favor of AI systems,’ concludes Welsch.

The researchers will present the study at the renowned CHI conference on May 14.

This article was originally published on the Aalto University website.